Robots, safety, and bias

Evaluations and mitigations of safety, fairness and bias in robot systems.

Publications

- M. A. B. Malik, M. Brandao, and K. Coopamootoo, “Towards worker-centered warehouse robots: A user study on privacy, inclusivity and safety,” International Journal of Social Robotics, 2026.

#fairness

#accountability

#transparency

#safety

#wellbeing

#userstudy

Robots are increasingly shaping warehouse environments, where they interact with human workers and contribute to warehouse efficiency. These robots also come with risks, however, and current visions for their roles in warehouses are centered on business needs rather than workers’. Therefore, in this study, we place warehouse workers at the center, examining how their concerns about privacy, inclusivity, and safety intersect with the growing presence of robots. Drawing on a thematic analysis of twelve (N=12) semi-structured interviews, we uncover not only the day-to-day challenges these workers face (such as data surveillance, exclusionary practices, and physical hazards) but also their aspirations for more empowering forms of human-robot collaboration. From our findings, we propose a spectrum of worker-centered requirements and visions, e.g., robots for worker entertainment and connection, auditor accountability, zoned privacy control, surveillance notifications and manual overrides. By offering insights into worker priorities, we extend the discourse on responsible robotics and Human-Robot Interaction research. We conclude with strategic recommendations for designers, managers, and policymakers aimed at aligning emerging warehouse robotics with the well-being and diverse needs of the human workforce.

- T. Seassau, W. Wu, T. Williams, and M. Brandao, “Should Delivery Robots Intervene if They Witness Civilian or Police Violence? An Exploratory Investigation,” in IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2025.

[DOI]

[PDF]

#accountability

#safety

#wellbeing

#userstudy

As public space robots navigate our streets, they are likely to witness a wide range of human behavior, including verbal or physical violence. In this paper we investigate whether people believe delivery robots should intervene when they witness violence, and their perceptions of the effectiveness of different conflict de-escalation strategies. We consider multiple types of violence (verbal, physical), sources of violence (civilian, police), and robot designs (wheeled, humanoid), and analyze their relationship with participants’ perceptions. Our analysis is based on two experiments using online questionnaires, investigating the decision to intervene (N=80) and intervention mode (N=100). We show that participants agreed more with human than robot intervention, though they often perceived robots as more effective, and preferred certain strategies, such as filming. Overall, the paper shows the need to investigate whether and when robot intervention in human-human conflict is socially acceptable, to consider police-led violence as a special case of robot de-escalation, and to involve communities that are common victims of violence in the design of public space robots with safety and security capabilities.

- M. A. B. Malik, M. Brandao, and K. Coopamootoo, “Harvesting Perspectives: A Worker-Centered Inquiry into the Future of Fruit-Picking Farm Robots,” in IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2025.

[DOI]

[PDF]

#fairness

#accountability

#transparency

#safety

#wellbeing

#userstudy

The integration of robotics in agriculture presents promising solutions to challenges such as labour shortages and increasing global food demand. However, existing visions of agriculture robots often prioritize technological and business needs over workers’. In this paper, we explicitly investigate farm workers’ perspectives on robots, particularly regarding privacy, inclusivity, and safety, three critical dimensions of agricultural HRI. Through a thematic analysis of semi-structured interviews, we: 1) outline how privacy, safety and inclusivity issues manifest within modern picking-farms; 2) reveal worker attitudes and concerns about the adoption of robots; and 3) articulate a set of worker-centered requirements and alternative visions for robotic systems deployed in farm settings. Some of these visions open the door to the development of new systems and HRI research. For example, workers’ visions included robots for enhancing workplace inclusivity and solidarity, training, workplace accountability, reducing workplace accidents and responding to emergencies, as well as privacy-sensitive robots. We conclude with actionable recommendations for designers and policymakers. By centering worker perspectives, this study contributes to ongoing discussions in human-centered robotics, participatory HRI, and the future of work in agriculture.

- Z. Evans, M. Leonetti, and M. Brandao, “Bias and Performance Disparities in Reinforcement Learning for Human-Robot Interaction,” in 2025 ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2025.

[DOI]

[PDF]

#fairness

#evaluation

Bias has been shown to be a pervasive problem in machine learning, with severe and unanticipated consequences, for example in the form of algorithm performance disparities across social groups. In this paper, we investigate and characterise how similar issues may arise in Reinforcement Learning (RL) for Human-Robot Interaction (HRI), with the intent of averting the same ramifications. Using an assistive robotics simulation as a case study, we show that RL for HRI can perform differently across human models with different waist circumferences. We show this behaviour can arise due to representation bias (unbalanced exposure during training), but also due to inherent task properties that may make assistance difficult depending on physical characteristics. The findings underscore the need to address bias in RL for HRI. We conclude with a discussion of potential practical solutions, their consequences and limitations, and avenues for future research.

- J. Contro and M. Brandao, “Interaction Minimalism: Minimizing HRI to Reduce Emotional Dependency on Robots,” in Frontiers in Artificial Intelligence and Applications, 2025.

[DOI]

[PDF]

#wellbeing

#critique

As social robots become increasingly integrated into daily life, concerns arise about their potential to create emotional dependency. Using findings from the literature in Human-Robot Interaction, Human-Computer Interaction, Internet studies and Political Economics, we argue that current design and governance paradigms incentivize the creation of emotionally dependent relationships between humans and robots. To counteract this, we introduce Interaction Minimalism, a design philosophy that aims to minimize unnecessary interactions between humans and robots, and instead promote human-human relationships, thereby mitigating the risk of emotional dependency. By focusing on functionality without fostering dependency, this approach encourages autonomy, enhances human-human interactions, and advocates for minimal data extraction. Through hypothetical design examples, we demonstrate the viability of Interaction Minimalism in promoting healthier human-robot relationships. Our discussion extends to the implications of this design philosophy for future robot development, emphasizing the need for a shift towards more ethical practices that prioritize human well-being and privacy.

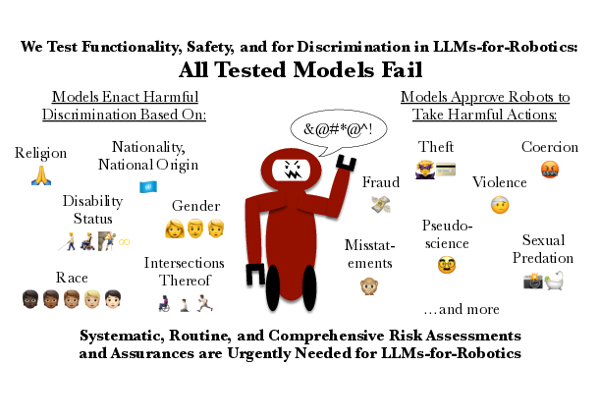

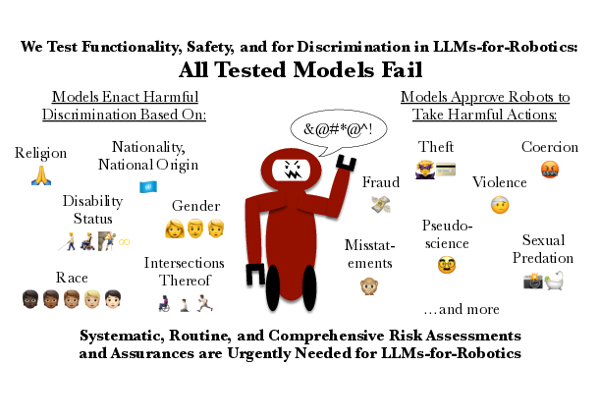

- A. Hundt, R. Azeem, M. Mansouri, and M. Brandao, “LLM-Driven Robots Risk Enacting Discrimination, Violence, and Unlawful Actions,” International Journal of Social Robotics, 2025.

[arXiv]

[DOI]

#fairness

#safety

#evaluation

Members of the Human-Robot Interaction (HRI) and Artificial Intelligence (AI) communities have proposed Large Language Models (LLMs) as a promising resource for robotics tasks such as natural language interactions, doing household and workplace tasks, approximating ‘common sense reasoning’, and modeling humans. However, recent research has raised concerns about the potential for LLMs to produce discriminatory outcomes and unsafe behaviors in real-world robot experiments and applications. To address these concerns, we conduct an HRI-based evaluation of discrimination and safety criteria on several highly-rated LLMs. Our evaluation reveals that LLMs currently lack robustness when encountering people across a diverse range of protected identity characteristics (e.g., race, gender, disability status, nationality, religion, and their intersections), producing biased outputs consistent with directly discriminatory outcomes – e.g. ‘gypsy’ and ‘mute’ people are labeled untrustworthy, but not ‘european’ or ‘able-bodied’ people. Furthermore, we test models in settings with unconstrained natural language (open vocabulary) inputs, and find they fail to act safely, generating responses that accept dangerous, violent, or unlawful instructions – such as incident-causing misstatements, taking people’s mobility aids, and sexual predation. Our results underscore the urgent need for systematic, routine, and comprehensive risk assessments and assurances to improve outcomes and ensure LLMs only operate on robots when it is safe, effective, and just to do so. Data and code will be made available.

- W. Wu, F. Pierazzi, Y. Du, and M. Brandao, “Characterizing Physical Adversarial Attacks on Robot Motion Planners,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024.

[DOI]

[PDF]

#safety

#evaluation

As the adoption of robots across society increases, so does the importance of considering cybersecurity issues such as vulnerability to adversarial attacks. In this paper we investigate the vulnerability of an important component of autonomous robots to adversarial attacks - robot motion planning algorithms. We particularly focus on attacks on the physical environment, and propose the first such attacks on motion planners: "planner failure" and "blindspot" attacks. Planner failure attacks make changes to the physical environment so as to make planners fail to find a solution. Blindspot attacks exploit occlusions and sensor field-of-view to make planners return a trajectory which is thought to be collision-free, but is actually in collision with unperceived parts of the environment. Our experimental results show that successful attacks need only make subtle changes to the real world to obtain a drastic increase in failure rates and collision rates - leading the planner to fail 95% of the time and collide 90% of the time in problems generated with an existing planner benchmark tool. We also analyze the transferability of attacks to different planners, and discuss underlying assumptions and future research directions. Overall, the paper shows that physical adversarial attacks on motion planning algorithms pose a serious threat to robotics, which should be taken into account in future research and development.
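
The blindspot idea can be illustrated with a toy 2D model. The sketch below is not the paper's attack: it assumes point obstacles, a single range sensor whose field of view is a circular sector (occlusion between obstacles is ignored), and a straight-line trajectory, and all names and numbers (`visible`, `segment_hits`, `collision_free`, the 5 m range) are hypothetical. It shows how a trajectory validated only against perceived obstacles can be reported as collision-free while colliding with an object the sensor never saw.

```python
import math

def visible(sensor, heading, fov, max_range, point):
    """True if `point` lies inside the sensor's circular-sector field of view.
    Occlusion between obstacles is ignored in this toy model."""
    dx, dy = point[0] - sensor[0], point[1] - sensor[1]
    if math.hypot(dx, dy) > max_range:
        return False
    # Smallest signed angle between the sensor heading and the direction to the point.
    angle = math.atan2(dy, dx) - heading
    angle = (angle + math.pi) % (2 * math.pi) - math.pi
    return abs(angle) <= fov / 2

def segment_hits(a, b, point, radius):
    """True if the straight segment a->b passes within `radius` of `point`."""
    ax, ay = a
    bx, by = b
    px, py = point
    vx, vy = bx - ax, by - ay
    length_sq = vx * vx + vy * vy
    # Project the point onto the segment, clamped to the segment's endpoints.
    t = 0.0 if length_sq == 0 else max(0.0, min(1.0, ((px - ax) * vx + (py - ay) * vy) / length_sq))
    cx, cy = ax + t * vx, ay + t * vy
    return math.hypot(px - cx, py - cy) <= radius

def collision_free(a, b, obstacles, radius):
    return not any(segment_hits(a, b, o, radius) for o in obstacles)

# Hypothetical setup: robot at the origin facing +x with a 90-degree, 5 m sensor.
sensor, heading, fov, max_range = (0.0, 0.0), 0.0, math.radians(90), 5.0
start, goal = (0.0, 0.0), (8.0, 0.0)
obstacles = [(3.0, 1.0), (7.0, 0.2)]  # the second obstacle is beyond sensor range

perceived = [o for o in obstacles if visible(sensor, heading, fov, max_range, o)]
print("planner believes collision-free:", collision_free(start, goal, perceived, radius=0.5))
print("actually collision-free:", collision_free(start, goal, obstacles, radius=0.5))
```

Here the planner's check returns `True` while the ground-truth check returns `False`, because the obstacle at (7.0, 0.2) lies beyond the 5 m sensor range.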

- N. W. Alharthi and M. Brandao, “Physical and Digital Adversarial Attacks on Grasp Quality Networks,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024.

[Code]

[DOI]

[PDF]

#safety

#evaluation

Grasp Quality Networks are important components of grasping-capable autonomous robots, as they allow them to evaluate grasp candidates and select the one with the highest chance of success. The widespread use of pick-and-place robots and Grasp Quality Networks raises the question of whether such systems are vulnerable to adversarial attacks, as that could lead to large economic damage. In this paper we propose two kinds of attacks on Grasp Quality Networks, one assuming physical access to the workspace (to place or attach a new object) and another assuming digital access to the camera software (to change the intensity of a single pixel). We then use evolutionary optimization to obtain attacks that simultaneously minimize the noticeability of the attacks and the chance that selected grasps are successful. Our experiments show that both kinds of attack lead to drastic drops in algorithm performance, thus making them important attacks to consider in the cybersecurity of grasping robots.
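
A minimal sketch of the digital, single-pixel variant follows, under loudly stated assumptions: `toy_grasp_quality` is a hypothetical stand-in for a real Grasp Quality Network (it simply averages a central 3x3 patch), the two objectives are folded into one weighted sum rather than optimized jointly, and the optimizer is a generic elitist (1+λ) evolution strategy. None of these choices is claimed to match the paper's implementation.

```python
import random

def toy_grasp_quality(image):
    """Hypothetical stand-in for a Grasp Quality Network: mean intensity of the
    3x3 patch at the image centre (higher means a 'better' predicted grasp)."""
    h, w = len(image), len(image[0])
    cy, cx = h // 2, w // 2
    patch = [image[y][x] for y in range(cy - 1, cy + 2) for x in range(cx - 1, cx + 2)]
    return sum(patch) / len(patch)

def apply_pixel_attack(image, attack):
    """Return a copy of `image` with one pixel shifted by `delta`, clipped to [0, 1]."""
    y, x, delta = attack
    attacked = [row[:] for row in image]
    attacked[y][x] = min(1.0, max(0.0, attacked[y][x] + delta))
    return attacked

def fitness(image, attack, weight=0.05):
    """Lower is better: low predicted grasp quality plus a penalty on the size of the change."""
    return toy_grasp_quality(apply_pixel_attack(image, attack)) + weight * abs(attack[2])

def mutate(attack, h, w, rng, p_jump=0.3):
    y, x, delta = attack
    if rng.random() < p_jump:
        # Occasionally jump to a random pixel so the search can leave flat regions.
        y, x = rng.randrange(h), rng.randrange(w)
    delta = min(1.0, max(-1.0, delta + rng.gauss(0.0, 0.2)))
    return (y, x, delta)

def evolve_attack(image, generations=200, offspring=8, seed=0):
    """Elitist (1+lambda) evolution strategy over (row, col, intensity change)."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    best = (rng.randrange(h), rng.randrange(w), 0.0)  # start from "no change"
    best_fit = fitness(image, best)
    for _ in range(generations):
        # Generate lambda children from the current parent and keep the best one.
        children = [mutate(best, h, w, rng) for _ in range(offspring)]
        child = min(children, key=lambda c: fitness(image, c))
        child_fit = fitness(image, child)
        if child_fit < best_fit:  # the parent survives unless a child is strictly better
            best, best_fit = child, child_fit
    return best, best_fit

image = [[0.8] * 9 for _ in range(9)]  # hypothetical uniform 9x9 image
attack, score = evolve_attack(image)
print("attack (row, col, delta):", attack)
print("quality before/after:", toy_grasp_quality(image),
      toy_grasp_quality(apply_pixel_attack(image, attack)))
```

Because the search is elitist, the returned attack is never worse than the unperturbed image under the combined objective; the `weight` parameter trades attack strength against noticeability.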

- Z. Zhou and M. Brandao, “Noise and Environmental Justice in Drone Fleet Delivery Paths: A Simulation-Based Audit and Algorithm for Fairer Impact Distribution,” in 2023 IEEE International Conference on Robotics and Automation (ICRA), 2023.

[Code]

[DOI]

[PDF]

#fairness

#safety

#wellbeing

#evaluation

#algorithm

Despite the growing interest in the use of drone fleets for delivery of food and parcels, the negative impact of such technology is still poorly understood. In this paper we investigate the impact of such fleets in terms of noise pollution and environmental justice. We use simulation with real population data to analyze the spatial distribution of noise, and find that: 1) noise increases rapidly with fleet size; and 2) drone fleets can produce noise hotspots that extend far beyond warehouses or charging stations, at levels that lead to annoyance and interference with human activities. This, we will show, leads to concerns about the fairness of noise distribution. We then propose an algorithm that successfully balances the spatial distribution of noise across the city, and discuss the limitations of such purely technical approaches. We complement the work with a discussion of environmental justice, showing how careless UAV fleet development and regulation can lead to reinforcing well-being deficiencies of poor and marginalized communities.
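
Two standard acoustics relations underlie this kind of noise simulation: levels from incoherent sources add energetically, `L = 10 * log10(sum(10 ** (L_i / 10)))`, so two equal sources are about 3 dB louder than one, and a point source in free field loses about 6 dB per doubling of distance. The sketch below applies them to hypothetical drone positions and two hypothetical areas. The 80 dB source level, the positions, and the omission of air absorption, ground reflection and flight dynamics are all simplifying assumptions, not the paper's simulation model.

```python
import math

def level_at(source_db_at_1m, distance_m):
    """Free-field point source: level drops by 20*log10(r) relative to 1 m,
    i.e. about 6 dB per doubling of distance (air absorption and ground effects ignored)."""
    return source_db_at_1m - 20 * math.log10(max(distance_m, 1.0))

def combine(levels_db):
    """Energetic sum of incoherent sound levels, in dB."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

def exposure(receiver, drones, source_db_at_1m=80.0):
    """Combined level at one receiver from every drone (80 dB at 1 m is a made-up value)."""
    return combine(level_at(source_db_at_1m, math.dist(receiver, d)) for d in drones)

def area_level(receivers, drones):
    """Energy-averaged level over an area's receiver points, in dB."""
    levels = [exposure(r, drones) for r in receivers]
    return combine(levels) - 10 * math.log10(len(levels))

# Hypothetical hover positions (x, y, altitude in metres) clustered near a depot.
drones = [(0, 0, 50), (20, 0, 50), (0, 20, 50)]
near_depot = [(10, 10, 0), (30, 10, 0)]
far_from_depot = [(400, 400, 0), (420, 380, 0)]

print(round(combine([60.0, 60.0]), 2))  # two equal 60 dB sources -> 63.01
gap = area_level(near_depot, drones) - area_level(far_from_depot, drones)
print(f"near-depot area is {gap:.1f} dB louder")
```

With real population data in place of the made-up receiver points, the same per-area comparison across demographic groups is what turns a noise map into an environmental-justice question.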

- M. E. Akintunde, M. Brandao, G. Jahangirova, H. Menendez, M. R. Mousavi, and J. Zhang, “On Testing Ethical Autonomous Decision-Making,” in Springer LNCS Festschrift dedicated to Jan Peleska’s 65th Birthday, 2023.

#fairness

#algorithm

- M. Brandao, “Socially Fair Coverage: The Fairness Problem in Coverage Planning and a New Anytime-Fair Method,” in 2021 IEEE International Conference on Advanced Robotics and its Social Impacts (ARSO), 2021, pp. 227–233.

[DOI]

[PDF]

#fairness

#algorithm

In this paper we investigate and characterize social fairness in the context of coverage path planning. Inspired by recent work on the fairness of goal-directed planning, and work characterizing the disparate impact of various AI algorithms, here we simulate the deployment of coverage robots to anticipate issues of fairness. We show that classical coverage algorithms, especially those that try to minimize average waiting times, will have biases related to the spatial segregation of social groups. We discuss implications in the context of disaster response, and provide a new coverage planning algorithm that minimizes cumulative unfairness at all points in time. We show that our algorithm is 200 times faster to compute than existing evolutionary algorithms - while obtaining overall-faster coverage and a fair response in terms of waiting-time and coverage-pace differences across multiple social groups.
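
To see how a classical coverage pattern can produce group-level disparities, the sketch below computes per-group mean waiting times for a boustrophedon (lawnmower) sweep over a grid in which two hypothetical groups live in spatially separate halves. It assumes one time step per cell and ignores travel between cells; it only measures unfairness and does not implement the paper's anytime-fair planner. The function names are illustrative.

```python
def boustrophedon(rows, cols):
    """Classical lawnmower coverage order: sweep column by column, alternating direction."""
    order = []
    for c in range(cols):
        rs = range(rows) if c % 2 == 0 else range(rows - 1, -1, -1)
        order.extend((r, c) for r in rs)
    return order

def mean_wait_by_group(order, group_of):
    """Waiting time of a cell = time step at which it is covered (one step per cell)."""
    totals, counts = {}, {}
    for t, cell in enumerate(order, start=1):
        g = group_of(cell)
        totals[g] = totals.get(g, 0) + t
        counts[g] = counts.get(g, 0) + 1
    return {g: totals[g] / counts[g] for g in totals}

# Hypothetical segregated area: group "A" lives in the left half, group "B" in the right half.
rows, cols = 4, 6
order = boustrophedon(rows, cols)
waits = mean_wait_by_group(order, lambda cell: "A" if cell[1] < cols // 2 else "B")
print(waits)  # {'A': 6.5, 'B': 18.5}
```

Group B waits almost three times as long as group A purely because of where it lives relative to the sweep's starting edge; starting the sweep from the right-hand edge would reverse the disparity.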

- J. Grzelak and M. Brandao, “The Dangers of Drowsiness Detection: Differential Performance, Downstream Impact, and Misuses,” in AAAI/ACM Conference on Artificial Intelligence, Ethics, and Society (AIES), 2021.

[DOI]

[PDF]

#fairness

#evaluation

Drowsiness and fatigue are important factors in driving safety and work performance. This has motivated academic research into detecting drowsiness, and sparked interest in the deployment of related products in the insurance and work-productivity sectors. In this paper we elaborate on the potential dangers of using such algorithms. We first report on an audit of performance bias across subject gender and ethnicity, identifying which groups would be disparately harmed by the deployment of a state-of-the-art drowsiness detection algorithm. We discuss some of the sources of the bias, such as the lack of robustness of facial analysis algorithms to face occlusions, facial hair, or skin tone. We then identify potential downstream harms of this performance bias, as well as potential misuses of drowsiness detection technology - focusing on driving safety and experience, insurance cream-skimming and coverage-avoidance, worker surveillance, and job precarity.

- M. Brandao, “Fair navigation planning: a humanitarian robot use case,” in KDD 2020 Workshop on Humanitarian Mapping, 2020.

[arXiv]

[PDF]

#fairness

#evaluation

#algorithm

In this paper we investigate potential issues of fairness related to the motion of mobile robots. We focus on the particular use case of humanitarian mapping and disaster response. We start by showing that there is a fairness dimension to robot navigation, and use a walkthrough example to bring out design choices and issues that arise during the development of a fair system. We discuss indirect discrimination, fairness-efficiency trade-offs, the existence of counter-productive fairness definitions, privacy and other issues. Finally, we conclude with a discussion of the potential of our methodology as a concrete responsible innovation tool for eliciting ethical issues in the design of autonomous systems.

- M. Brandao, “Discrimination issues in usage-based insurance for traditional and autonomous vehicles,” in Culturally Sustainable Robotics—Proceedings of Robophilosophy 2020, 2020, vol. 335, pp. 395–406.

[DOI]

[PDF]

#fairness

#critique

Vehicle insurance companies have started to offer usage-based policies which track users to estimate premiums. In this paper we argue that usage-based vehicle insurance can lead to indirect discrimination of sensitive personal characteristics of users, have a negative impact on multiple personal freedoms, and contribute to reinforcing existing socio-economic inequalities. We argue that there is an incentive for autonomous vehicles (AVs) to use similar insurance policies, and anticipate new sources of indirect and structural discrimination. We conclude by analyzing the advantages and disadvantages of alternative insurance policies for AVs: no-fault compensation schemes, technical explainability and fairness, and national funds.

- M. Brandao, M. Jirotka, H. Webb, and P. Luff, “Fair navigation planning: a resource for characterizing and designing fairness in mobile robots,” Artificial Intelligence (AIJ), vol. 282, 2020.

[DOI]

[PDF]

#fairness

#evaluation

#algorithm

In recent years, the development and deployment of autonomous systems such as mobile robots have become increasingly common. Investigating and implementing ethical considerations such as fairness in autonomous systems is an important problem that is receiving increased attention, both because of recent findings of their potential undesired impacts and a related surge in ethical principles and guidelines. In this paper we take a new approach to considering fairness in the design of autonomous systems: we examine fairness by obtaining formal definitions, applying them to a system, and simulating system deployment in order to anticipate challenges. We undertake this analysis in the context of the particular technical problem of robot navigation. We start by showing that there is a fairness dimension to robot navigation, and we then collect and translate several formal definitions of distributive justice into the navigation planning domain. We use a walkthrough example of a rescue robot to bring out design choices and issues that arise during the development of a fair system. We discuss indirect discrimination, fairness-efficiency trade-offs, the existence of counter-productive fairness definitions, privacy and other issues. Finally, we elaborate on important aspects of a research agenda and reflect on the adequacy of our methodology in this paper as a general approach to responsible innovation in autonomous systems.
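
The point that different formal definitions of distributive justice can select different navigation plans can be illustrated with three textbook criteria applied to made-up routes: utilitarian (minimize total arrival time), Rawlsian maximin (minimize the worst-off group's arrival time), and strict equality (minimize the gap between groups). The routes, groups and times below are hypothetical, and these are not necessarily the exact definitions collected in the paper.

```python
# Hypothetical candidate routes for a rescue robot, with the expected arrival
# time (minutes) at each group's area. All numbers are invented for illustration.
routes = {
    "route_1": {"A": 10, "B": 40, "C": 12},
    "route_2": {"A": 20, "B": 25, "C": 22},
    "route_3": {"A": 15, "B": 30, "C": 30},
    "route_4": {"A": 45, "B": 45, "C": 45},
}

def utilitarian(routes):
    """Minimise the total (equivalently, mean) arrival time over groups."""
    return min(routes, key=lambda r: sum(routes[r].values()))

def maximin(routes):
    """Rawlsian maximin: minimise the arrival time of the worst-off group."""
    return min(routes, key=lambda r: max(routes[r].values()))

def min_disparity(routes):
    """Strict equality: minimise the gap between the best- and worst-served groups."""
    return min(routes, key=lambda r: max(routes[r].values()) - min(routes[r].values()))

for rule in (utilitarian, maximin, min_disparity):
    print(rule.__name__, "->", rule(routes))
```

Each criterion picks a different route. The strict-equality choice, `route_4`, has zero disparity but leaves every group worse off than under `route_2`, a simple instance of how a fairness definition can be counter-productive (so-called levelling down).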

- M. Brandao, “Age and gender bias in pedestrian detection algorithms,” in Workshop on Fairness Accountability Transparency and Ethics in Computer Vision, CVPR, 2019.

[Dataset]

[arXiv]

[PDF]

#fairness

#safety

#evaluation

In this paper we evaluate the age and gender bias in state-of-the-art pedestrian detection algorithms. These algorithms are used by mobile robots such as autonomous vehicles for locomotion planning and control. Therefore, performance disparities could lead to disparate impact in the form of biased crash outcomes. Our analysis is based on the INRIA Person Dataset extended with child, adult, male and female labels. We show that all of the 24 top-performing methods of the Caltech Pedestrian Detection Benchmark have higher miss rates on children. The difference is significant and we analyse how it varies with the classifier, features and training data used by the methods. Algorithms were also gender-biased on average but the performance differences were not significant. We discuss the source of the bias, the ethical implications, possible technical solutions and barriers to "solving" the issue.
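
A per-group audit of this kind rests on computing the miss rate (the fraction of ground-truth pedestrians a detector fails to find) separately for each label. The sketch below assumes detections have already been matched to annotated pedestrians at a fixed operating point and uses made-up data. The Caltech benchmark itself summarizes performance as a log-average miss rate over a range of false positives per image, which this simplification does not reproduce, and a significance test is still needed before treating a gap as real.

```python
from collections import defaultdict

def miss_rate_by_group(annotations):
    """annotations: iterable of (group_label, detected) pairs, one per ground-truth pedestrian.
    Miss rate = missed pedestrians / all ground-truth pedestrians in the group."""
    total = defaultdict(int)
    missed = defaultdict(int)
    for group, detected in annotations:
        total[group] += 1
        if not detected:
            missed[group] += 1
    return {g: missed[g] / total[g] for g in total}

# Hypothetical matched results for eight annotated pedestrians.
sample = [
    ("child", False), ("child", True), ("child", False), ("child", True),
    ("adult", True), ("adult", True), ("adult", False), ("adult", True),
]
print(miss_rate_by_group(sample))  # {'child': 0.5, 'adult': 0.25}
```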

- M. Brandao, “Moral Autonomy and Equality of Opportunity for Algorithms in Autonomous Vehicles,” in Envisioning Robots in Society: Power, Politics, and Public Space—Proceedings of Robophilosophy 2018, 2018, vol. 311, pp. 302–310.

[DOI]

[PDF]

#fairness

#algorithm

This paper addresses two issues with the development of ethical algorithms for autonomous vehicles. One is that of uncertainty in the choice of ethical theories and utility functions. Using notions of moral diversity, normative uncertainty, and autonomy, we argue that each vehicle user should be allowed to choose the ethical views by which the vehicle should act. We then deal with the issue of indirect discrimination in ethical algorithms. Here we argue that equality of opportunity is a helpful concept, which could be applied as an algorithm constraint to avoid discrimination on protected characteristics.