Explainable robotics

Characterizing and implementing explainability requirements for robot systems.

Publications

- W. Wu and M. Brandao, “Robot Arms Too Short? Explaining Motion Planning Failures using Design Optimization,” in IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2025.

[Abstract]

[DOI]

[PDF]

#algorithm

Motion planning algorithms are a fundamental component of robotic systems. Unfortunately, as shown by recent literature, their lack of explainability makes it difficult to understand and diagnose planning failures. The feasibility of a motion planning problem depends heavily on the robot model, which can be a major reason for failure. We propose a method that automatically generates explanations of motion planner failure based on robot design. When a planner is not able to find a feasible solution to a problem, we compute a minimum modification to the robot’s design that would enable the robot to complete the task. This modification then serves as an explanation of the type: "the planner could not solve the problem because robot links X are not long enough". We demonstrate how this explanation conveys what the robot is doing, why it fails, and how the failure could be recovered if the robot had a different design. We evaluate our method through a user study, which shows our explanations help users better understand robot intent, cause of failure and recovery, compared to other methods. Moreover, users were more satisfied with our method’s explanations, and reported that they understood the capabilities of the robot better after exposure to the explanations.
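The idea of a minimum design modification can be illustrated with a deliberately simplified sketch. It assumes a planar arm based at the origin and checks only the outer reach limit, ignoring obstacles, joint limits and the inner workspace boundary. The function name `explain_reach_failure` and the message wording are hypothetical, and this is not the optimization method of the paper.

```python
import math


def explain_reach_failure(link_lengths, goal_xy):
    """Toy design-based explanation for a planar arm (hypothetical helper).

    Assumption: only the outer reach limit matters; obstacles, joint limits
    and the inner workspace boundary are ignored.
    """
    distance = math.hypot(goal_xy[0], goal_xy[1])
    max_reach = sum(link_lengths)
    if distance <= max_reach:
        return 0.0, "goal is within the arm's outer reach; no design change needed"
    # Smallest total increase in link length that puts the goal on the reach boundary.
    extension = distance - max_reach
    message = (
        "the planner could not solve the problem because the links are "
        f"too short by a total of {extension:.2f} length units"
    )
    return extension, message


extension, message = explain_reach_failure([1.0, 1.0], (3.0, 4.0))
print(message)
```

A real explanation of this type would also have to decide which links to change and account for collisions, which is where an optimization over the robot design comes in.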

- Q. Liu and M. Brandao, “Generating Environment-based Explanations of Motion Planner Failure: Evolutionary and Joint-Optimization Algorithms,” in 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024.

[Abstract]

[DOI]

[PDF]

#algorithm

Motion planning algorithms are important components of autonomous robots, but they are difficult to understand and debug when they fail to find a solution to a problem. In this paper we propose a solution to the failure-explanation problem: automatically generated environment-based explanations. These explanations reveal the objects in the environment that are responsible for the failure, and how their location in the world should change so as to make the planning problem feasible. Concretely, we propose two methods - one based on evolutionary optimization and another on joint trajectory-and-environment continuous optimization. We show that the evolutionary method is well-suited to explain sampling-based motion planners, or even optimization-based motion planners in situations where computation speed is not a concern (e.g. post-hoc debugging). However, the optimization-based method is 4000 times faster and thus more attractive for interactive applications, albeit at the cost of a slightly lower success rate. We demonstrate the capabilities of the methods through concrete examples and quantitative evaluation.
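As a rough illustration of an environment-based explanation, the sketch below works on an occupancy grid and finds a smallest set of obstacle cells whose removal would connect start to goal. It swaps in a plain 0-1 breadth-first search for the evolutionary and continuous-optimization methods of the paper, and it removes obstacles rather than relocating them. The grid encoding (1 for obstacle, 0 for free) and the name `blocking_cells` are assumptions made for the example.

```python
from collections import deque


def blocking_cells(grid, start, goal):
    """Obstacle cells on a path that crosses as few obstacles as possible.

    Assumptions: grid[r][c] is 1 for an obstacle and 0 for free space,
    moves are 4-connected, and start and goal are free cells.
    """
    rows, cols = len(grid), len(grid[0])
    cost = {start: 0}
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = grid[nr][nc]  # entering an obstacle cell costs 1, a free cell 0
            new_cost = cost[cell] + step
            if new_cost < cost.get(nxt, float("inf")):
                cost[nxt] = new_cost
                parent[nxt] = cell
                # 0-1 BFS: zero-cost moves go to the front of the deque.
                if step == 0:
                    queue.appendleft(nxt)
                else:
                    queue.append(nxt)
    # Walk back from the goal and collect the obstacles the path had to cross.
    crossed = []
    cell = goal
    while cell is not None:
        if grid[cell[0]][cell[1]] == 1:
            crossed.append(cell)
        cell = parent[cell]
    return crossed[::-1]


wall = [
    [0, 1, 0],
    [0, 1, 0],
    [0, 1, 0],
]
print(blocking_cells(wall, (0, 0), (0, 2)))
```

An empty result means the problem was already feasible; a non-empty one names the cells that "are responsible for the failure" in this simplified sense.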

- K. Alsheeb and M. Brandao, “Towards Explainable Road Navigation Systems,” in IEEE International Conference on Intelligent Transportation Systems (ITSC), 2023.

[Abstract]

[Code]

[DOI]

[PDF]

#algorithm

Road navigation systems are important systems for pedestrians, drivers, and autonomous vehicles. Routes provided by such systems can be unintuitive, and may not contribute to an improvement of users’ mental models of maps and traffic. Automatically-generated explanations have the potential to solve these problems. Towards this goal, in this paper we propose algorithms for the generation of explanations for routes, based on properties of the road networks and traffic. We use a combination of inverse optimization and diverse shortest path algorithms to provide optimal explanations to questions of the type "why is path A fastest, rather than path B (which the user provides)?", and "why does the fastest path not go through waypoint W (which the user provides)?". The explanations reveal properties of the map - such as speed limits, congestion and road closure - that are not compatible with users’ expectations, and the knowledge of which would make users prefer the system’s path. We demonstrate the explanation algorithms on real map and traffic data, and conduct an evaluation of the properties of the algorithms.

- R. Eifler, M. Brandao, A. Coles, J. Frank, and J. Hoffmann, “Evaluating Plan-Property Dependencies: A Web-Based Platform and User Study,” in Proceedings of the International Conference on Automated Planning and Scheduling (ICAPS), 2022.

[Abstract]

[DOI]

[PDF]

#evaluation

#algorithm

#userstudy

The trade-offs between different desirable plan properties - e.g. PDDL temporal plan preferences - are often difficult to understand. Recent work addresses this by iterative planning with explanations elucidating the dependencies between such plan properties. Users can ask questions of the form ‘Why does the plan not satisfy property p?’, which are answered by ‘Because then we would have to forego q’. It has been shown that such dependencies can be computed reasonably efficiently. But is this form of explanation actually useful for users? We run a large crowd-worker user study (N = 100 in each of 3 domains) evaluating that question. To enable such a study in the first place, we contribute a Web-based platform for iterative planning with explanations, running in standard browsers. Comparing users with vs. without access to the explanations, we find that the explanations enable users to identify better trade-offs between the plan properties, indicating an improved understanding of the planning task.

- M. Brandao and Y. Setiawan, “‘Why Not This MAPF Plan Instead?’ Contrastive Map-based Explanations for Optimal MAPF,” in ICAPS 2022 Workshop on Explainable AI Planning (XAIP), 2022.

[Abstract]

[Code]

[PDF]

#algorithm

Multi-Agent Path Finding (MAPF) plans can be very complex to analyze and understand. Recent user studies have shown that explanations would be a welcome tool for MAPF practitioners and developers to better understand plans, as well as to tune map layouts and cost functions. In this paper we formulate two variants of an explanation problem in MAPF that we call contrastive "map-based explanation". The problem consists of answering the question "why don’t agents A follow paths P’ instead?"—by finding regions of the map that would have to be an obstacle in order for the expected plan to be optimal. We propose three different methods to compute these explanations, and evaluate them quantitatively on a set of benchmark problems that we make publicly available. Motivations for generating this type of explanation are discussed in the paper and include both user understanding of MAPF problems, and designer-aids to guide the improvement of map layouts.

- M. Brandao, M. Mansouri, A. Mohammed, P. Luff, and A. Coles, “Explainability in Multi-Agent Path/Motion Planning: User-study-driven Taxonomy and Requirements,” in International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2022, pp. 172–180.

[Abstract]

[DOI]

[PDF]

#evaluation

#userstudy

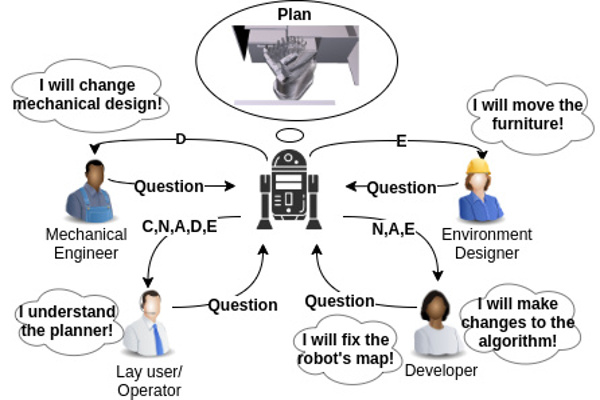

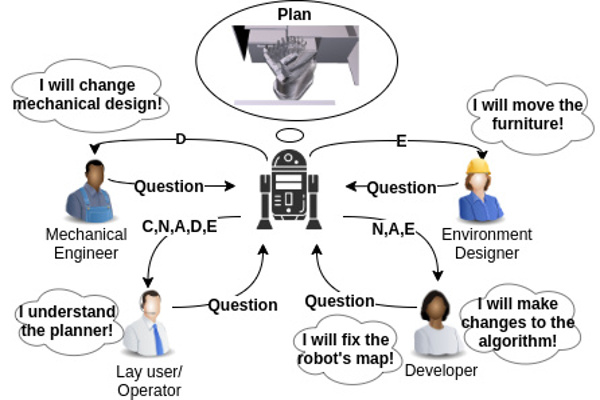

Multi-Agent Path Finding (MAPF) and Multi-Robot Motion Planning (MRMP) are complex problems to solve, analyze and build algorithms for. Automatically-generated explanations of algorithm output, by improving human understanding of the underlying problems and algorithms, could thus lead to better user experience, developer knowledge, and MAPF/MRMP algorithm designs. Explanations are contextual, however, and thus developers need a good understanding of the questions that can be asked about algorithm output, the kinds of explanations that exist, and the potential users and uses of explanations in MAPF/MRMP applications. In this paper we provide a first step towards establishing a taxonomy of explanations, and a list of requirements for the development of explainable MAPF/MRMP planners. We use interviews and a questionnaire with expert developers and industry practitioners to identify the kinds of questions, explanations, users, uses, and requirements of explanations that should be considered in the design of such explainable planners. Our insights cover a diverse set of applications: warehouse automation, computer games, and mining.

- M. Brandao, A. Coles, and D. Magazzeni, “Explaining Path Plan Optimality: Fast Explanation Methods for Navigation Meshes Using Full and Incremental Inverse Optimization,” in Proceedings of the International Conference on Automated Planning and Scheduling (ICAPS), 2021, pp. 56–64.

[Abstract]

[Code]

[DOI]

[PDF]

#algorithm

Path planners are important components of various products from video games to robotics, but their output can be counter-intuitive due to problem complexity. As a step towards improving the understanding of path plans by various users, here we propose methods that generate explanations for the optimality of paths. Given the question "why is path A optimal, rather than B which I expected?", our methods generate an explanation based on the changes to the graph that make B the optimal path. We focus on the case of path planning on navigation meshes, which are heavily used in the computer game industry and robotics. We propose two methods - one based on a single inverse-shortest-paths optimization problem, the other incrementally solving complex optimization problems. We show that these methods offer computation time improvements of up to 3 orders of magnitude relative to domain-independent search-based methods, as well as scaling better with the length of explanations. Finally, we show through a user study that, when compared to baseline cost-based explanations, our explanations are more satisfactory and effective at increasing users’ understanding of problems.
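For orientation, an inverse-shortest-paths problem of this kind is commonly written as a linear program over modified edge weights. The formulation below is a textbook-style sketch and not necessarily the exact one used in the paper; the symbols are assumptions: a graph $G = (V, E)$ with original weights $w$, modified weights $w'$, node potentials $\pi$, and the expected path $B$ from start $s$ to goal $t$.

$$
\begin{aligned}
\min_{w',\, \pi} \quad & \sum_{(i,j) \in E} \lvert w'_{ij} - w_{ij} \rvert \\
\text{s.t.} \quad & \pi_j - \pi_i \le w'_{ij} && \forall (i,j) \in E, \\
& \pi_j - \pi_i = w'_{ij} && \forall (i,j) \in B, \\
& w'_{ij} \ge 0 && \forall (i,j) \in E.
\end{aligned}
$$

Summing the first constraint along any path from $s$ to $t$ shows that its cost under $w'$ is at least $\pi_t - \pi_s$, and the second constraint makes $B$ attain exactly that value, so $B$ is optimal under $w'$. The edges where $w'_{ij} \neq w_{ij}$ then form the explanation.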

- M. Brandao, G. Canal, S. Krivic, P. Luff, and A. Coles, “How experts explain motion planner output: a preliminary user-study to inform the design of explainable planners,” in IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2021, pp. 299–306.

[Abstract]

[DOI]

[PDF]

#evaluation

#userstudy

Motion planning is a hard problem that can often overwhelm both users and designers, owing to the difficulty of understanding the optimality of a solution or the reasons a planner fails to find any solution. Inspired by recent work in machine learning and task planning, in this paper we are guided by a vision of developing motion planners that can provide reasons for their output - thus potentially contributing to better user interfaces, debugging tools, and algorithm trustworthiness. Towards this end, we propose a preliminary taxonomy and a set of important considerations for the design of explainable motion planners, based on the analysis of a comprehensive user study of motion planning experts. We identify the kinds of things that need to be explained by motion planners ("explanation objects"), types of explanation, and several procedures required to arrive at explanations. We also elaborate on a set of qualifications and design considerations that should be taken into account when designing explainable methods. These insights contribute to bringing the vision of explainable motion planners closer to reality, and can serve as a resource for researchers and developers interested in designing such technology.

- R. Eifler, M. Brandao, A. Coles, J. Frank, and J. Hoffmann, “Plan-Property Dependencies are Useful: A User Study,” in ICAPS 2021 Workshop on Explainable AI Planning (XAIP), 2021.

[Abstract]

[PDF]

#evaluation

#userstudy

The trade-offs between different desirable plan properties - e.g. PDDL temporal plan preferences - are often difficult to understand. Recent work proposes to address this by iterative planning with explanations elucidating the dependencies between such plan properties. Users can ask questions of the form ‘Why does the plan you suggest not satisfy property p?’, which are answered by ‘Because then we would have to forego q’, where not-q is entailed by p in plan space. It has been shown that such plan-property dependencies can be computed reasonably efficiently. But is this form of explanation actually useful for users? We contribute a user study evaluating that question. We design use cases from three domains and run a large user study (N = 40 for each domain, ca. 40 minutes work time per user and domain) on the internet platform Prolific. Comparing users with vs. without access to the explanations, we find that the explanations tend to enable users to identify better trade-offs between the plan properties, indicating an improved understanding of the task.
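The entailment behind such answers can be sketched on a toy plan space. The example assumes the plan space is given as an explicit list of candidate plans, each represented by the set of properties it satisfies; real planning tasks would need this to be computed rather than enumerated. The name `forgone_properties` and the property labels are hypothetical.

```python
def forgone_properties(plans, p):
    """Properties q that no plan satisfying p also satisfies (p entails not-q).

    Assumption: `plans` is an explicit list of property sets, one per plan.
    If no plan satisfies p at all, every other property is reported.
    """
    with_p = [props for props in plans if p in props]
    all_props = set().union(*plans)
    return sorted(
        q for q in all_props - {p}
        if all(q not in props for props in with_p)
    )


plans = [{"cheap", "safe"}, {"fast", "safe"}, {"cheap"}]
# Why does the plan not satisfy "fast"? Because then we would have to forego:
print(forgone_properties(plans, "fast"))
```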

- M. Brandao, G. Canal, S. Krivic, and D. Magazzeni, “Towards providing explanations for robot motion planning,” in 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 3927–3933.

[Abstract]

[DOI]

[PDF]

#evaluation

#algorithm

#userstudy

Recent research in AI ethics has put forth explainability as an essential principle for AI algorithms. However, it is still unclear how this is to be implemented in practice for specific classes of algorithms - such as motion planners. In this paper we unpack the concept of explanation in the context of motion planning, introducing a new taxonomy of kinds and purposes of explanations in this context. We focus not only on explanations of failure (previously addressed in motion planning literature) but also on contrastive explanations - which explain why a trajectory A was returned by a planner, instead of a different trajectory B expected by the user. We develop two explainable motion planners, one based on optimization, the other on sampling, which are capable of answering failure and contrastive questions. We use simulation experiments and a user study to motivate a technical and social research agenda.

- M. Brandao and D. Magazzeni, “Explaining plans at scale: scalable path planning explanations in navigation meshes using inverse optimization,” in IJCAI 2020 Workshop on Explainable Artificial Intelligence (XAI), 2020.

[Abstract]

[PDF]

#algorithm

In this paper we propose methods that provide explanations for path plans, in particular those that answer questions of the type "why is path A optimal, rather than path B which I expected?". In line with other work in eXplainable AI Planning (XAIP), such explanations could help users better understand the outputs of path planning methods, as well as help debug or iterate the design of planners and maps. By specializing the explanation methods to path planning, using optimization-based inverse-shortest-paths formulations, we obtain drastic computation time improvements relative to general XAIP methods, especially as the length of the explanations increases. One of the claims of this paper is that such specialization might be required for explanation methods to scale and therefore come closer to real-world usability. We propose and evaluate the methods on large-scale navigation meshes, which are representations for path planning heavily used in the computer game industry and robotics.